2025-04-30 08:27:00 Wed ET

The global market for mobile cloud telecommunication continues to expand and has become a worldwide economic phenomenon. Through the new mobile cloud infrastructure, the Internet broadens and deepens what is digitally feasible, from virtual reality (VR) headsets and electric vehicles (EV) to artificial intelligence (AI) and the metaverse. In this positive light, the current cloud infrastructure can help bring about a new wave of digital revolutions worldwide. Beneath all of these layers of cloud abstraction, the physical Internet infrastructure serves as the foundation of our chosen digital future. In the broader context of digital technological advancements, we help demystify the physical building blocks of the cloud Internet infrastructure worldwide to explain how they mold, shape, expand, and constrain digital abstraction, now that practical uses of the Internet have multiplied far beyond its original remit. Specifically, we examine what will likely need to change in these physical layers beneath the cloud for the Internet to remain sustainable, both in the physical sense and in the wider sense of global environmental protection.

From the seabed across the Atlantic and Pacific Oceans to data centers in key cities on the U.S. West and East Coasts, fiber-optic cables form the backbone of the physical Internet worldwide. Almost all Internet traffic flows back and forth through these cables, day in and day out. From Apple and Google to Meta, Microsoft, and Amazon, several tech titans vertically integrate the Internet by laying fiber-optic cables, building data centers in different countries, and providing cloud services with AI search engines, robots, avatars, and virtual assistants. As the Internet becomes more powerful, it is vitally important for us to better understand its physical and corporate composition. Only by peeling back the multiple layers of digital abstraction can one lay bare the critical foundations of the new Internet. Technological advances across these layers support the next dual waves of business model transformations and digital revolutions around the world, and they shine fresh light on how AI-driven cloud services help accelerate a new generation of disruptive innovations in trade, finance, and technology. Every new business can become an AI cloud service provider.

As the digital abstraction of everything online, the cloud aims to separate storing, retrieving, and computing on data from the physical constraints of the underlying hardware. This abstraction often hides the hardware from the user altogether. To many end users, the cloud serves as a huge virtual drawer into which we can put our digital data for fast and secure online storage and retrieval, and later work on, or play with, that data anywhere at any time. To cloud service providers, however, the cloud is profoundly physical. A recent survey by the International Data Corporation (IDC) shows that the world generates about 123 zettabytes, or 123 trillion gigabytes, of data each year. Cloud service providers design, build, and secure the physical parts and components of the cloud to keep up as the world produces ever more data for online storage. Many active users sort and crunch their own data from week to week. For each type of data and computational task, there are different kinds of physical data storage with trade-offs among cost, speed, latency, and durability. Like the layers of the Internet, the cloud needs these multiple layers of data storage to be flexible enough to adapt to any kind of future use.
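The cost-versus-latency trade-off can be sketched as a simple selection rule: pick the cheapest tier that is still fast enough. This is a minimal illustration only; the tier names and all cost and latency figures below are hypothetical placeholders (flash is set at 5 times the cost of hard disk merely to mirror the ratio mentioned later in this article), and durability is left out for brevity.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Tier:
    name: str
    cost_per_tb_month: float  # hypothetical relative cost units
    read_latency_s: float     # hypothetical typical time to first byte


# Hypothetical tiers, from fastest and most expensive to slowest and cheapest.
TIERS = [
    Tier("flash", 20.0, 0.001),
    Tier("hard_disk", 4.0, 0.01),
    Tier("tape", 1.0, 120.0),  # a robot must fetch and wind the tape first
]


def cheapest_tier(max_latency_s: float) -> Tier:
    """Return the cheapest tier whose read latency meets the requirement."""
    candidates = [t for t in TIERS if t.read_latency_s <= max_latency_s]
    if not candidates:
        raise ValueError("no tier is fast enough")
    return min(candidates, key=lambda t: t.cost_per_tb_month)


print(cheapest_tier(0.005).name)  # flash
print(cheapest_tier(1.0).name)    # hard_disk
print(cheapest_tier(3600).name)   # tape
```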

At the Rutherford Appleton Laboratory, one of Britain’s largest national scientific research labs, two systems named Asterix and Obelix serve as stewards of massive quantities of data. These systems robotically manage the largest tape libraries in Europe. Together, Asterix and Obelix store and organize the deluge of scientific data that flows from particle physics experiments at the Large Hadron Collider (LHC) of the European Organization for Nuclear Research (CERN), as well as from climate and astronomy research. The volume of data from these research workstreams has grown by orders of magnitude in recent years. Asterix and Obelix form a sizeable chunk of the lab’s own private cloud: together they store 440,000 terabytes of data, equivalent to more than a million copies of The Lord of the Rings films. When a scientist requests data from an experiment, one of several robots zooms horizontally along a set of rails to find the right cabinet, and vertically to find the right tape. The tape reel is then scanned to find the data that the scientist needs. High durability and low energy consumption (a tape sitting on a shelf draws no power) make tape the mainstream medium of archival data storage not only for this kind of scientific data, but also for big chunks of the cloud at Amazon, Apple, Google, Meta, and Microsoft.

The cloud also spreads hard-disk drives across multiple data centers around the world. A hard disk reads and writes data mechanically on a spinning magnetic platter, and this storage is about 5 times more cost-effective than flash, the newer and faster technology, for storing multimedia files such as photos, podcasts, and movies. Even on the side of the cloud service provider, storage software abstracts away the exact physical device on which data lives. One such technique is a redundant array of independent disks (RAID). RAID takes a bunch of storage devices and treats them as one virtual storage shed. Some versions of RAID split a photo into multiple parts so that no single piece of hardware holds all of the photo; instead, numerous devices each hold a fragment, together with extra parity information computed from the other fragments. Even if a piece of hardware breaks (and hardware failures happen all the time), the photo can still be recovered. This technique significantly improves the safety, integrity, and recoverability of data.
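The parity trick behind RAID can be shown in a few lines. This minimal sketch uses XOR parity, the idea used by RAID 4 and RAID 5; for simplicity the parity sits on one dedicated disk (as in RAID 4) rather than rotating across disks (as in RAID 5), and real arrays work on fixed-size blocks rather than whole files. Because the parity chunk is the XOR of all data chunks, XOR-ing the survivors reproduces any one missing chunk.

```python
from functools import reduce


def xor_bytes(a: bytes, b: bytes) -> bytes:
    return bytes(x ^ y for x, y in zip(a, b))


def stripe_with_parity(data: bytes, n_data_disks: int) -> list:
    """Split data into equal, zero-padded chunks plus one XOR parity chunk."""
    chunk = -(-len(data) // n_data_disks)  # ceiling division
    padded = data.ljust(chunk * n_data_disks, b"\0")
    chunks = [padded[i * chunk:(i + 1) * chunk] for i in range(n_data_disks)]
    parity = reduce(xor_bytes, chunks)
    return chunks + [parity]


def rebuild(disks: list) -> list:
    """Recover the single missing chunk (marked None) by XOR-ing all survivors."""
    missing = [i for i, d in enumerate(disks) if d is None]
    if len(missing) != 1:
        raise ValueError("single parity can rebuild exactly one lost disk")
    restored = list(disks)
    restored[missing[0]] = reduce(xor_bytes, [d for d in disks if d is not None])
    return restored


photo = b"pretend these bytes are a photo"
disks = stripe_with_parity(photo, 3)
disks[1] = None                     # one drive fails
recovered = rebuild(disks)
print(b"".join(recovered[:3]).rstrip(b"\0") == photo)  # True
```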

The cloud is redundant in another way. Each data fragment is typically stored in at least 3 separate locations. Should a hurricane destroy one of these data centers, there would be 2 other copies left for the user to fall back on. This redundancy makes cloud data storage significantly more reliable and robust, even during rare natural hazards and disasters. Most of the time, millions of hard-disk drives are spinning on standby just in case.
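The arithmetic behind 3 copies is simple: if each site independently loses its copy with probability p, all 3 copies are lost with probability p³, or one in a million when p is 1%. The sketch below places copies in distinct sites and checks readability after a loss; the site names and the rotation placement rule are hypothetical, and the independence assumption is exactly why real providers keep copies geographically apart.

```python
SITES = ["us-east", "us-west", "eu-west", "ap-south"]  # hypothetical site names


def place_replicas(fragment_id: int, copies: int = 3) -> list:
    """Place each copy in a different site using a simple rotation (illustrative)."""
    if copies > len(SITES):
        raise ValueError("need at least as many sites as copies")
    start = fragment_id % len(SITES)
    return [SITES[(start + k) % len(SITES)] for k in range(copies)]


def readable_after(lost_sites: set, replicas: list) -> bool:
    """A fragment survives if at least one copy sits outside the lost sites."""
    return any(site not in lost_sites for site in replicas)


def p_all_lost(p_site_loss: float, copies: int = 3) -> float:
    """Probability that every copy is lost, assuming independent site failures."""
    return p_site_loss ** copies


replicas = place_replicas(7)
print(replicas)                                  # ['ap-south', 'us-east', 'us-west']
print(readable_after({"ap-south"}, replicas))    # True: a hurricane hits one site
print(p_all_lost(0.01))                          # about one in a million
```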

Many tech titans continue to work on making the cloud infrastructure more durable. One promising medium is glass. A fast and precise laser etches tiny dots in multiple layers within platters of glass 75 millimeters square and 2 millimeters thick. Data is stored in the length, width, depth, size, and orientation of each dot. Encoding data in glass in this way is the modern equivalent of etching in stone. At Microsoft, computer scientists and researchers have begun to harness this technology to build a brand-new cloud storage system out of glass. They have expanded storage capacity so that each slide can hold over 75 gigabytes of data, and their fellow researchers apply machine-learning algorithms to speed up reading data back from the slides. These slides can last for 10,000 years. Microsoft continues to develop a cloud system that can handle thousands, or even millions, of these glass slides. Achieving this scale is necessary for tech titans such as Microsoft and Meta to rebuild a truly durable foundation for the new cloud. However, such a foundation is necessary but not sufficient. Tech titans must also supply continual flows of electric power to cloud storage devices and data centers around the world, and in practice they should move data substantially closer to their users to deliver faster and better AI business applications. Across numerous cloud locations in many countries, the resulting efficiency gains can then scale up in a cost-effective manner.
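Storing data in several properties of each dot multiplies capacity: if a dot can take one of S distinguishable states, it carries log₂(S) bits. The sketch below assumes, purely for illustration, 4 orientations and 2 sizes, giving 8 states and 3 bits per dot; the text does not say how many levels Microsoft actually distinguishes, so `ORIENTATIONS` and `SIZES` are hypothetical.

```python
import math

ORIENTATIONS = 4  # hypothetical number of distinguishable dot orientations
SIZES = 2         # hypothetical number of distinguishable dot sizes


def bits_per_dot(orientations: int = ORIENTATIONS, sizes: int = SIZES) -> int:
    """Whole bits carried by one dot (floor of log2 of the number of states)."""
    return int(math.log2(orientations * sizes))


def encode(data: bytes) -> list:
    """Map bytes to (orientation, size) symbols, bits_per_dot() bits per dot."""
    k = bits_per_dot()
    bits = "".join(f"{b:08b}" for b in data)
    bits += "0" * (-len(bits) % k)  # pad to a multiple of k bits
    return [divmod(int(bits[i:i + k], 2), SIZES) for i in range(0, len(bits), k)]


def decode(symbols: list, n_bytes: int) -> bytes:
    """Invert encode(): rebuild the bit string and drop the padding."""
    k = bits_per_dot()
    bits = "".join(f"{o * SIZES + s:0{k}b}" for o, s in symbols)
    return int(bits[:n_bytes * 8], 2).to_bytes(n_bytes, "big")


dots = encode(b"glass")
print(len(dots), decode(dots, 5))  # 14 dots for 40 bits, then b'glass'
```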

The Internet is a global network of networks. Internet exchanges are the meeting points where many of these networks connect to one another worldwide. Inside each exchange, switches pass traffic between the networks, which in turn serve Internet-connected devices such as smartphones and computers. As the Internet has grown substantially, Internet exchanges and their switches have become more numerous and far more capable, handling traffic at scales that would have been well beyond the reach of older technology.

Flashing green lights adorn the fiber-optic cables, which connect to key servers belonging to a wide range of Internet service providers (ISP), cloud services, and content distribution networks (CDN). During peak times, more than 4 terabits flow through each data center every second, equivalent to over a million HD movies streaming simultaneously (a terabit is one trillion bits, and there are 8 bits in a byte). For the New York Stock Exchange (NYSE), for instance, data often spreads across more than 4 data centers along the U.S. East Coast. These data centers connect over a thousand networks, pass the packets of video calls from one mobile-phone provider to another, and deliver data from TikTok to a local telecom operator so that an American teenager can numb his or her mind with an endless stream of video clips. Hundreds of Internet exchanges simultaneously route web traffic around the world. Allocating traffic well conserves bandwidth, a measure of how much data a conduit, such as an optical fiber or a Wi-Fi channel, can carry per second. As a result, these Internet exchanges work together to reduce the total load on smartphones, computers, servers, and cables. In combination, they also help shrink latency, the time it takes for a click or a swipe on one Internet-connected device to reach another, whether that device belongs to a human user or sits in a data center. Today, virtual reality (VR) headsets and metaverses need latencies far lower than many business applications tolerate. In due course, this requirement pushes data centers, servers, and telecom operators to bring more of the Internet’s capabilities closer to its edge, where the users are. With more computing power available there, many AI business applications have become faster and better by moving data closer to their users across geographic borders.
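A quick check of the streaming equivalence above: spreading 4 terabits per second across a million simultaneous streams leaves 4 megabits per second for each, which is in the range commonly used for HD video (that typical bitrate is our assumption, not a figure from the text). Dividing by 8 bits per byte converts the peak rate to bytes.

```python
TBIT = 10**12  # bits in a terabit (decimal SI prefix)
MBIT = 10**6   # bits in a megabit

peak_bps = 4 * TBIT
streams = 1_000_000

per_stream_mbps = peak_bps / streams / MBIT
peak_bytes_per_s = peak_bps / 8  # 8 bits per byte

print(per_stream_mbps)           # 4.0 megabits per second per stream
print(peak_bytes_per_s / 10**9)  # 500.0 gigabytes per second
```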

The edge is where the Internet meets the real world. At the edge of the Internet, users produce and consume new, raw, dynamic, and static data. In each smartphone, computer, or other device, high-performance semiconductor microchips and graphics processing units (GPU) help process real-time AI business applications with ultra-low latencies. For some users, this local processing power is more than adequate. For many others, however, the number-crunching capacity found in palms and pockets is not enough. Software engineers, statisticians, and data scientists need far more intensive computational power to train foundation AI models (such as those behind Alexa, ChatGPT, Gemini, and Copilot), run simulations for weather forecasts, target treatments in precision medicine, and render computer graphics, images, and VR metaverses for films, among many other business applications. In financial technology, specifically, econometricians borrow techniques from earlier cruise missile control systems and apply the resulting recursive multivariate filter to screen more than 6,000 U.S. stock prices and returns in search of better buy-and-hold, longer-run stock signals. With U.S. patent protection, which generally lasts 20 years from the filing date, these fintech network platforms provide proprietary alpha stock signals and personal finance tools for institutional investors and retail traders worldwide.
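The text does not name the filter, but the best-known recursive estimator that grew out of aerospace guidance is the Kalman filter, which we assume here. Below is a minimal one-dimensional (local-level) version that tracks a slowly drifting mean in a series of returns; the noise variances `q` and `r` are arbitrary assumptions, the returns are made up, and a real screen would use a multivariate state with estimated parameters. It illustrates the technique only and is not the patented method referred to above.

```python
def kalman_local_level(observations, q=1e-5, r=1e-3, x0=0.0, p0=1.0):
    """Scalar Kalman filter for the model
        state:       x_t = x_{t-1} + w_t,  w_t ~ N(0, q)
        observation: y_t = x_t + v_t,      v_t ~ N(0, r)
    Returns one filtered state estimate per observation."""
    x, p = x0, p0
    estimates = []
    for y in observations:
        # Predict: random-walk state, so the mean carries over and variance grows.
        p = p + q
        # Update: blend prediction and observation using the Kalman gain k.
        k = p / (p + r)
        x = x + k * (y - x)
        p = (1 - k) * p
        estimates.append(x)
    return estimates


returns = [0.010, 0.012, 0.008, 0.011, 0.009, 0.010]  # made-up daily returns
print([round(e, 4) for e in kalman_local_level(returns)])
```

Each step uses only the previous estimate and the newest observation, which is what makes the filter recursive and cheap enough to run across thousands of series.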

Today, the Internet often delivers latencies of about 65 milliseconds. Autonomous electric vehicles (EV), VR metaverses, large language models (LLM), online video games, and other AI software applications require latencies of no more than 20 milliseconds. Meeting this specification means distributing not only Internet connectivity and data storage throughout the global cloud system, but also significant amounts of computing power. Without such progress, many AI business applications remain impractical, even though they might turn out to be massively popular, useful, and lucrative in due course.
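Why the 20-millisecond target forces computing toward the edge can be seen from physics alone. Light in glass fiber travels at roughly c/1.47, so a round-trip budget caps how far away a server can be. The sketch below assumes the 20 ms is a round-trip figure and ignores routing detours (real cable paths are longer than straight lines), so its distances are upper bounds.

```python
C_KM_PER_MS = 299_792.458 / 1000  # speed of light in vacuum, km per millisecond
N_GLASS = 1.47                    # approximate refractive index of silica fiber


def max_one_way_km(rtt_budget_ms: float, processing_ms: float = 0.0) -> float:
    """Farthest server distance that keeps round-trip propagation within budget."""
    one_way_ms = (rtt_budget_ms - processing_ms) / 2
    return one_way_ms * C_KM_PER_MS / N_GLASS


print(round(max_one_way_km(20)))      # about 2039 km with zero processing time
print(round(max_one_way_km(20, 10)))  # about 1020 km if processing takes 10 ms
```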

Internet exchanges have already reduced cloud latency significantly over the years by getting faster at moving data between data centers around the world. State-of-the-art switches can now transmit 5 terabits per second from one data center to another. These switches increasingly spread out the load of moving data worldwide, helping the Internet as a whole function more efficiently. The U.S. networking giant Cisco applies machine-learning algorithms to predict the best times of day and routes for moving time-insensitive data, in order to avoid traffic jams across global cloud networks.
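To get a feel for 5 terabits per second, here is an idealized calculation of how long one petabyte would take at full line rate. The petabyte payload is an assumed example, and the formula ignores protocol overhead and propagation delay, so real transfers take somewhat longer.

```python
def transfer_seconds(n_bytes: int, link_bps: int) -> float:
    """Idealized serialization time: bytes to bits, divided by line rate."""
    return n_bytes * 8 / link_bps


PETABYTE = 10**15
seconds = transfer_seconds(PETABYTE, 5 * 10**12)
print(seconds, round(seconds / 60, 1))  # 1600.0 seconds, about 26.7 minutes
```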

In practice, there are some technical ways for tech titans to move data from place to place faster. Notably, one can increase the speed at which light travels through fiber-optic cables by hollowing out their cores. Light, and thus data, travels almost 50% faster through air than through standard glass. In December 2022, Microsoft acquired Lumenisity, a startup specializing in hollow-core fiber technology. Lumenisity can give Microsoft significant competitive advantages in delivering higher cloud speeds and lower latency.
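The "almost 50% faster" figure follows from refractive indices: light's speed in a medium is c/n, with n of about 1.47 for silica glass and about 1.0003 for air. The sketch compares one-way delay over an illustrative 1,000 km route; both index values are approximations we assume for the example.

```python
C_KM_PER_S = 299_792.458
N_SOLID = 1.47     # approximate index of a standard silica fiber core
N_HOLLOW = 1.0003  # approximate index of air in a hollow core


def one_way_ms(distance_km: float, n: float) -> float:
    """Propagation delay in milliseconds for light travelling at c / n."""
    return distance_km * n / C_KM_PER_S * 1000


d = 1000  # km, an illustrative route length
solid, hollow = one_way_ms(d, N_SOLID), one_way_ms(d, N_HOLLOW)
print(f"solid {solid:.2f} ms, hollow {hollow:.2f} ms, "
      f"speed-up {N_SOLID / N_HOLLOW - 1:.0%}")
# solid 4.90 ms, hollow 3.34 ms, speed-up 47%
```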

In theory, tech titans such as Microsoft, Amazon, and Google can reduce load and latency even further by switching at the speed of light. Most Internet switches are electronic (like most smartphones and computers). Packets of data travel along optical fibers in the form of light, or more specifically, photons. When a packet passes through a switch, as it may several times on its way from one user or server to another, it flips from photons to electrons and then back into photons again. The transceivers that perform this conversion use up time and energy. Should Internet switches become photonic instead, data might make the entire trip in the form of photons, improving both speed and latency across the whole Internet.

For the foreseeable future, the biggest reductions in load and latency are likely to come from upgrades to Internet infrastructure at the edge. CDNs and other distribution networks already show this potential: they have been integral to the rapid growth of video-streaming services such as Netflix, TikTok, and YouTube, and they remain essential to the Internet of today and tomorrow. In the meantime, those CDNs and their successors need to start thinking more for themselves, running computation rather than merely storing and forwarding content.
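CDNs cut load and latency mostly by caching popular content at the edge, so that repeat requests never travel back to the origin server. Below is a minimal sketch of an edge cache with least-recently-used (LRU) eviction; LRU is one common policy among several and is assumed here for illustration, and `fetch_from_origin` is a hypothetical stand-in for the slow request to the central server.

```python
from collections import OrderedDict


class EdgeCache:
    """A least-recently-used cache standing in for one CDN edge node."""

    def __init__(self, capacity: int, fetch_from_origin):
        self.capacity = capacity
        self.fetch_from_origin = fetch_from_origin  # slow call to the origin
        self.store = OrderedDict()
        self.hits = 0
        self.misses = 0

    def get(self, key):
        if key in self.store:
            self.hits += 1
            self.store.move_to_end(key)  # mark as most recently used
            return self.store[key]
        self.misses += 1
        value = self.fetch_from_origin(key)  # long round trip to the origin
        self.store[key] = value
        if len(self.store) > self.capacity:
            self.store.popitem(last=False)  # evict the least recently used item
        return value


cache = EdgeCache(2, lambda k: f"video:{k}")
for k in ["a", "b", "a", "c", "a", "b"]:
    cache.get(k)
print(cache.hits, cache.misses, list(cache.store))  # 2 4 ['a', 'b']
```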

For AI business applications that generate loads of sensor data, such as autonomous EVs, VR headsets, and many real-time smart-city applications, it makes sense for tech titans to bring computational heft as close as possible to the data, rather than lugging gigabytes of data back and forth to a central hub. A new generation of CDNs can spring up in spots all around the world, providing AI-driven business applications with the edge-computing services they need to perform well in real time.
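A toy illustration of moving computation to the data: an edge node summarizes a stream of sensor readings locally and forwards only a compact summary plus any alarming values, instead of shipping every reading to a central hub. The sensor, the threshold, and the summary fields are hypothetical.

```python
import statistics
import struct


def raw_payload_bytes(readings: list) -> int:
    """Bytes needed to ship every reading as a 64-bit float to a central hub."""
    return len(struct.pack(f"{len(readings)}d", *readings))


def edge_summary(readings: list, threshold: float) -> dict:
    """Process locally; forward only a summary and readings above the threshold."""
    return {
        "count": len(readings),
        "mean": statistics.fmean(readings),
        "max": max(readings),
        "alerts": [r for r in readings if r > threshold],
    }


readings = [20.0] * 10_000   # hypothetical temperature sensor, one reading each
readings[5_000] = 95.0       # a single anomaly worth reporting
print(raw_payload_bytes(readings))            # 80000 bytes if sent raw
print(edge_summary(readings, threshold=50.0))  # a handful of numbers instead
```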

From Meta and Microsoft to Nvidia, some major cloud platforms try to reduce latency, the lags and delays in online video games, by renting space in lots of smaller data centers much closer to the edge. This approach significantly shortens the distance that data has to travel between gamers and their games. The solution has become especially important for VR headsets and adjacent AI software applications, where lags and delays can cause motion sickness and many other kinds of discomfort.
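Renting many small data centers only helps if each player is steered to a nearby one. This minimal sketch of that steering step assumes the client has already measured round-trip times to candidate sites; the site names and timings are hypothetical, and the 20 ms budget simply echoes the figure quoted earlier for latency-sensitive applications.

```python
from typing import Optional


def pick_edge(measured_rtt_ms: dict, max_rtt_ms: float) -> Optional[str]:
    """Choose the data center with the lowest measured round-trip time,
    or None if even the best one exceeds the latency budget."""
    if not measured_rtt_ms:
        return None
    best = min(measured_rtt_ms, key=measured_rtt_ms.get)
    return best if measured_rtt_ms[best] <= max_rtt_ms else None


probes = {"central-hub": 48.0, "metro-edge-1": 9.5, "metro-edge-2": 14.2}
print(pick_edge(probes, 20.0))                   # metro-edge-1
print(pick_edge({"central-hub": 48.0}, 20.0))    # None: too far for VR
```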

Computing with light, rather than with electrons, would help speed up the design and development of many new AI software applications. Training each neural network requires moving terabytes of data from storage to AI microchips and GPUs. It can take significant amounts of time and energy to push large-scale data through a neural network, from neuron to neuron and from layer to layer. Microchips and GPUs are now so fast that it is often impossible to feed them data quickly enough to take full advantage of their processing capabilities. Data transfer runs up against the physical limits of sending electrons over copper wires, as well as the cost of converting photons into electrons and back.
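The problem of chips outrunning their data supply is commonly framed with the roofline model: attainable performance is the lesser of the chip's peak compute rate and its memory bandwidth times the arithmetic intensity (operations performed per byte moved). The accelerator figures below are hypothetical round numbers, not any specific GPU's specification.

```python
def attainable_tflops(peak_tflops: float, mem_bw_tb_s: float,
                      flops_per_byte: float) -> float:
    """Roofline model: performance is capped by compute or by data movement.
    TB/s multiplied by FLOPs per byte gives TFLOP/s."""
    return min(peak_tflops, mem_bw_tb_s * flops_per_byte)


# Hypothetical accelerator: 1000 TFLOP/s peak compute, 3 TB/s memory bandwidth.
for intensity in (10, 100, 1000):
    print(intensity, attainable_tflops(1000, 3, intensity))
# At 10 or 100 FLOPs per byte the chip is starved for data (30 and 300 TFLOP/s);
# only above about 333 FLOPs per byte does it reach its 1000 TFLOP/s peak.
```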

All of these efforts could help save huge amounts of time, heat, and energy, making the cloud far more robust, powerful, and efficient than it is today. However, optical computing remains speculative and a long way off; it has been a long-held dream of tech titans for many decades. In the meantime, past and present builders of the Internet continue to work on many other ways to make the cloud whir more efficiently. Without these advances, the environmental consequences of the cloud might raise doubts about how well the planet can cope physically with the Internet.

In recent years, data centers have used between 240 and 500 terawatt-hours of electricity per year, roughly 1% to 2% of total electricity consumption worldwide. This share has risen sharply from less than 0.5% almost 25 years ago. The low end of the estimates exceeds the electricity consumption of Australia; the high end exceeds that of France, one of the top 10 consumers of electricity worldwide. As AI software applications continue to proliferate over the next few decades, we can expect the power consumption of data centers to rise steeply.
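As a sanity check on these percentages, divide the data-center range by global electricity consumption. The global total below (about 27,000 terawatt-hours a year) is our own rough assumption, not a figure from the text; with it, the low end of the range comes out nearer 1% and the high end near 2%.

```python
GLOBAL_TWH = 27_000  # assumption: rough annual global electricity consumption


def share_of_global(dc_twh: float, global_twh: float = GLOBAL_TWH) -> float:
    """Fraction of global electricity used by data centers."""
    return dc_twh / global_twh


for dc_twh in (240, 500):
    print(dc_twh, f"{share_of_global(dc_twh):.1%}")  # 0.9%, then 1.9%
```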

Growing demand for digital experiences means more and bigger data centers. Amazon, Google, Meta, and Microsoft now collectively use 72 terawatt-hours of electricity each year, more than double their usage almost 7 years ago, according to a recent survey by the International Energy Agency (IEA). Today, these 4 tech titans account for 80% of global hyperscale data-center capacity. A large share of the rest belongs to the Chinese tech titans Alibaba, Tencent, Baidu, and ByteDance, the parent company of TikTok. Huawei, another Chinese tech giant and cloud service provider, is also among the main cloud operators, along with the major state enterprises China Telecom and China Mobile.

Outside China, the pace of data-center construction has taxed resources to the point that some governments have felt obliged to slow it down. For example, Singapore temporarily halted the construction of new data centers out of concern that building more could make it hard to meet its long-term commitment to net-zero carbon emissions by 2050. In Ireland, the national grid operator, EirGrid, has stopped issuing connections to new data centers until 2028 out of concern that the grid would run out of capacity. Data centers already account for almost 20% of electricity consumption there.

In fact, these pauses have not stopped data-center growth but have shifted it elsewhere. More data centers continue to pop up in secondary markets, such as Maryland and Malaysia, that have fewer energy-resource constraints. As data-center activity grows in these regions, their governments may have to step in to protect their electric power grids. Overall, though, the Internet’s use of electricity has become far more efficient in recent years. From 2015 to 2024, the number of Internet users surged by almost 80%, global Internet traffic by more than 600%, and data-center workloads by 350%. Yet the total electricity consumed by these data centers rose by only 25% to 75%.
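Putting these figures together: a 350% rise in workloads means 4.5 times the 2015 level, so a 25% to 75% rise in electricity implies that each unit of data-center work used only about 28% to 39% of its 2015 energy. The calculation simply takes the growth figures quoted above at face value.

```python
def energy_per_work_ratio(energy_growth: float, workload_growth: float) -> float:
    """Energy per unit of work relative to the base year, from growth rates."""
    return (1 + energy_growth) / (1 + workload_growth)


WORKLOAD_GROWTH = 3.50  # workloads up 350%, i.e. 4.5 times the 2015 level
for energy_growth in (0.25, 0.75):
    ratio = energy_per_work_ratio(energy_growth, WORKLOAD_GROWTH)
    print(f"energy per unit of work: {ratio:.0%} of the 2015 level")
# 28%, then 39%
```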

These efficiency gains arise partly from improvements in computing hardware. For decades, the energy required for the same amount of computation has fallen by almost half every 2.5 years, a trend known as Koomey’s Law. Further gains come from the data centers themselves: as they have grown in size, a greater fraction of their energy goes to computation rather than to overheads such as cooling.
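Both effects can be expressed in a few lines. The first function projects the halving trend described above; the second uses power usage effectiveness (PUE), the industry's standard ratio of total facility energy to the energy reaching IT equipment, to show what fraction of energy goes to computation. The PUE values in the example are illustrative assumptions.

```python
def energy_per_computation(e0: float, years: float,
                           halving_years: float = 2.5) -> float:
    """Koomey-style trend: energy for a fixed computation halves every period."""
    return e0 * 0.5 ** (years / halving_years)


def it_share(pue: float) -> float:
    """Fraction of facility energy that reaches IT equipment: 1 / PUE."""
    return 1 / pue


print(energy_per_computation(1.0, 10))  # 0.0625: four halvings in a decade
print(it_share(2.0), round(it_share(1.1), 2))  # 0.5 versus about 0.91
```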

In the meantime, a major business need is long-term energy storage, literally saving energy for a rainy day. When a power grid fails, a data center typically runs on lithium-ion batteries for a few minutes until methane generators kick in. Google, Amazon, Meta, and Microsoft are now investing in alternative energy-storage technology. For instance, hydrogen can pack a lot of renewable energy into a small space, and in theory hydrogen power plants could keep the average data center running for days instead of minutes. Data centers can also help alleviate the variable supply that afflicts power grids running on renewables. In practice, data-center operators can shift their workloads by moving deferrable tasks, such as training an AI model or applying machine-learning algorithms to predict weather events, to different data centers or different hours. Allocating such time-insensitive tasks well can yield large efficiency gains. Specifically, Google has built a system that shifts computational tasks to the time slots when the power grid is cleanest. Further gains result from scheduling these tasks in off-peak hours.
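Below is a minimal sketch of carbon-aware scheduling in the spirit described above; it is not Google's system, whose internals the text does not describe. Given an hourly forecast of grid carbon intensity (hypothetical numbers), it picks the start hour for a deferrable job, such as an AI training run, that minimizes the summed intensity over the job's duration, using a sliding window so each candidate start costs constant time.

```python
def greenest_window(intensity_g_per_kwh: list, job_hours: int) -> int:
    """Return the start hour minimizing total carbon intensity over the job."""
    if not 0 < job_hours <= len(intensity_g_per_kwh):
        raise ValueError("job must fit inside the forecast")
    window = sum(intensity_g_per_kwh[:job_hours])
    best_start, best_total = 0, window
    for start in range(1, len(intensity_g_per_kwh) - job_hours + 1):
        # Slide the window one hour: add the new last hour, drop the old first.
        window += (intensity_g_per_kwh[start + job_hours - 1]
                   - intensity_g_per_kwh[start - 1])
        if window < best_total:
            best_start, best_total = start, window
    return best_start


# Hypothetical forecast in grams of CO2 per kWh, one value per hour.
forecast = [450, 430, 400, 300, 220, 180, 200, 350]
print(greenest_window(forecast, 3))  # 4: hours 4 to 6 are the cleanest
```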

Key tech titans also play a prominent role in another climate-friendly technology: durable carbon-dioxide removal (CDR). CDR involves taking carbon dioxide from the atmosphere and storing it safely so that it never gets back there. In recent years, Microsoft has pursued CDR to help achieve its longer-run goal of becoming carbon-negative.

These disruptive innovations help explain why some tech titans make big promises about their longer-term environmental sustainability. Google and Equinix, another operator of hyperscale data centers, seek to run completely on carbon-free energy by 2030. Microsoft commits to becoming carbon-negative and water-positive by 2030. Amazon aims to run its own data centers on 100% renewable energy by 2025 in pursuit of net-zero carbon emissions by 2040. Apple commits to becoming 100% carbon-neutral by 2030 across its global supply chains and data centers, through a combination of reducing carbon emissions by 55%-75% and boosting carbon removal by 35%. Meanwhile, Meta seeks to achieve net-zero carbon emissions by 2035.

Bringing more cloud computing power closer to the user means many more, smaller data centers in major cities and regions. Around the world, these data centers continue to enjoy energy efficiency gains and economies of scale and scope across the major continents. Recent surveys suggest that the global edge data-center market could more than triple in value to about $30 billion to $35 billion by the end of the current decade.

Another major factor is AI’s ravenous appetite for energy. AI requires far more calculations than basic computational tasks do. A rack of normal servers might run on 7 kilowatts of electricity, whereas AI racks run on 30 to 100 kilowatts. The reason for this discrepancy is that AI uses much more powerful hardware than the basic hardware behind photo storage and website maintenance. Because AI involves complex matrix mathematics performed in parallel, it carries out large blocks of computation all at once. For many AI software applications, lots of transistors have to change state quickly within a short time frame. Hence, AI draws much more electric power than most normal computing tasks, which flip far fewer transistors at once for each typical calculation.
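The transistor argument corresponds to the standard estimate of CMOS switching (dynamic) power, P = αCV²f, where α is the fraction of the chip's capacitance that toggles each clock cycle. Holding the chip, voltage, and clock fixed, power scales directly with α, which is why dense matrix work draws more. The chip parameters below are hypothetical; the rack figures are the ones quoted in the text, converted to daily energy.

```python
def dynamic_power_w(alpha: float, switched_capacitance_f: float,
                    voltage_v: float, freq_hz: float) -> float:
    """Classic CMOS switching-power estimate: P = alpha * C * V**2 * f,
    where alpha is the fraction of capacitance that toggles each cycle."""
    return alpha * switched_capacitance_f * voltage_v ** 2 * freq_hz


def daily_kwh(rack_kw: float) -> float:
    """Energy used in 24 hours by a rack drawing a constant load."""
    return rack_kw * 24


# Hypothetical chip: same capacitance, voltage and clock; only activity differs.
light = dynamic_power_w(0.05, 1e-7, 0.8, 2e9)  # sparse, serial work
heavy = dynamic_power_w(0.20, 1e-7, 0.8, 2e9)  # dense, parallel matrix maths
print(round(heavy / light, 6))  # 4.0: power scales with switching activity

for kw in (7, 30, 100):  # rack figures quoted in the text
    print(kw, "kW ->", daily_kwh(kw), "kWh per day")  # 168, 720, 2400
```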

Computer scientists and software engineers may train each large language model (LLM) for weeks on tens of thousands of power-hungry AI servers, GPUs, and semiconductor microchips. As AI foundation models become more complex, they consume more energy. For comparison, training GPT-4 is estimated to have taken OpenAI more than 50 gigawatt-hours of electricity, or 0.02% of California’s annual electricity consumption. This is more than 50 times the electricity used to train its predecessor, GPT-3.

A recent survey by Nvidia (the manufacturer of the most popular AI microchips) shows that the total energy costs of running AI models in practice would dwarf the costs of training them. It is no coincidence that the AI tech titans happen to be the biggest operators of data centers worldwide. The future of AI depends on whether tech titans can build out the physical cloud infrastructure to support both near-term and longer-run AI advancements. Microsoft continues to invest heavily in OpenAI’s next research workstreams. As a key cloud operator, Microsoft plans to spend more than $50 billion in capital expenditures each year on global cloud infrastructure from 2024. Meta plans to spend more than $30 billion on data centers each year in the current decade. CoreWeave, a startup for AI cloud infrastructure, has grown dramatically from only 3 to 15 data centers in North America in recent years, and it plans to expand further into many other regions of the world. Another company, IonQ, continues to provide high-end quantum computing services through the cloud platforms of major tech titans such as Amazon, Google, and Microsoft.

The submarine cable system carries almost 85% of intercontinental Internet traffic. A subsea fiber-optic cable gets cut almost every week, often because an anchor drags across the ocean floor. However, the Internet rarely misses a mouse click, for 2 physical reasons. First, with more than 550 subsea cables spanning 1.4 million kilometers, there is more than enough redundancy in most places to make up for cable failures. Second, a fleet of 60 repair ships stands by to fix broken cables around the world. For these reasons, the Internet remains potent, robust, and resilient across continents, with diverse cloud connections.

Fiber-optic cables run along numerous routes around the world. This diversity gives the Internet many options for directing web traffic worldwide. In most cases, if one path fails, packets of data can find an alternative way around. This feature is part of what makes cloud abstraction possible.
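Rerouting around a broken cable is, at heart, a path search on a graph whose edges are links. Real Internet routing relies on protocols such as BGP rather than on one global search, so the breadth-first search below, over a hypothetical three-city topology, only illustrates why having multiple routes makes a single cut survivable.

```python
from collections import deque


def find_route(links: list, src: str, dst: str, failed=frozenset()):
    """Breadth-first search for a path that avoids failed (undirected) links."""
    graph = {}
    for a, b in links:
        if (a, b) in failed or (b, a) in failed:
            continue  # skip cut cables
        graph.setdefault(a, []).append(b)
        graph.setdefault(b, []).append(a)
    prev = {src: None}
    queue = deque([src])
    while queue:
        node = queue.popleft()
        if node == dst:  # walk back through predecessors to build the path
            path = []
            while node is not None:
                path.append(node)
                node = prev[node]
            return path[::-1]
        for nxt in graph.get(node, []):
            if nxt not in prev:
                prev[nxt] = node
                queue.append(nxt)
    return None  # no surviving route


links = [("New York", "London"), ("New York", "Lisbon"), ("Lisbon", "London")]
print(find_route(links, "New York", "London"))
# ['New York', 'London']
print(find_route(links, "New York", "London", failed={("New York", "London")}))
# ['New York', 'Lisbon', 'London']: traffic detours around the cut
```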

Whenever a subsea cable breaks somewhere in the world, one of the main cloud operators dispatches a repair ship to fix it. A pulse of light may be sent down the line to pinpoint the rupture in a subsea fiber-optic cable that may be only a few centimeters thick. The repair ship uses sonar and a remotely operated vehicle to find the break, and then hauls the ends of the cable on board. There, fiber-optic specialists splice on a new section of fiber with precision, a task like trying to align 3 different strands of hair. The specialists then operate the remote vehicle to bury the repaired cable in the ocean floor. In most cases, the vast majority of cloud users have no idea that a cable was broken in the first place. These repair jobs generally take only a few days, and during this time the network automatically re-routes packets of data over other paths. Across cloud data centers worldwide, this long-standing redundancy proves its worth.
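The "pulse of light" technique is known as optical time-domain reflectometry (OTDR): a pulse is sent into the fiber, light reflects back from the break, and the round-trip time gives the distance. Below is a minimal version of the arithmetic, assuming a group index of about 1.47; the 10-millisecond echo is a made-up example, and long subsea links with optical amplifiers use more specialized variants of the technique.

```python
C_M_PER_S = 299_792_458
GROUP_INDEX = 1.47  # assumed; the exact value depends on the fiber type


def distance_to_break_km(round_trip_s: float, n: float = GROUP_INDEX) -> float:
    """Light travels to the fault and back, so halve the round-trip path."""
    return C_M_PER_S / n * round_trip_s / 2 / 1000


print(round(distance_to_break_km(0.01)))  # a 10 ms echo: about 1020 km out
```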

Laying fiber-optic cables can be quite expensive for tech titans and cloud operators. In the past, telecom companies such as AT&T and Verizon formed consortiums to pay for the task. Now cloud tech titans such as Google, Microsoft, and Amazon do so. Their hyperscale data centers require massive amounts of Internet connectivity to keep the cloud up to date, and these tech titans value the predictability that ownership ensures.

Google backed its first transpacific subsea cable system back in 2008. Since then, Google has invested in more than 25 additional subsea cable systems around the world, and it wholly owns 12 of them. Microsoft owns 5 subsea cable systems across the continents. Alcatel Submarine Networks, one of the 3 major manufacturers of submarine fiber-optic cables, suggests that the major cloud tech titans (Amazon, Google, Microsoft, Cisco, Oracle, and others) support more than 65% of current cable projects around the world. This vertical integration helps these tech titans expand the cloud with more diverse connections across all of the key continents. As a result, the cloud can become more potent, robust, and resilient across continents in the face of geopolitical tensions. Yet as the global cable map diversifies physically, the resulting cloud landscape concentrates corporately.

The bigger question is which tech titans own and control the physical connections of the Internet. This question tends to become more urgent in times of real-world wars and conflicts, from the Russia-Ukraine war in Eastern Europe to the simultaneous conflict between Israel and Hamas in the Middle East. As the Internet becomes increasingly central to modern life, it has become urgent for tech titans to further secure numerous cloud connections and fiber-optic cable systems worldwide.

Data can travel not only through fiber-optic cables but also through the air and space. This redundancy significantly improves cloud resilience during cable failures. Satellite Internet is also good news for users in poor and remote parts of the world. Elon Musk’s Starlink runs more than 5,000 satellites in orbit, with several thousand more ready for launch by 2030. Musk reiterates that he aims to build 42,000 satellites as part of Starlink’s longer-run project. In the meantime, Starlink claims 2 million users worldwide. Jeff Bezos’s Amazon plans to launch more than 3,000 satellites with Project Kuiper. OneWeb, a British satellite operator, currently operates 650 satellites in orbit. More satellites will follow as China and India build their own aerospace capacity.

Satellites in low-Earth orbit (LEO), only a few hundred kilometers above Earth's surface, offer much faster, safer, and more reliable connections than the satellite links of the mid-1990s. LEO satellites are good enough to stream Netflix movies in a remote mountain cabin, although they are not as fast as subsea fiber-optic cables. From the point of view of an Earth-bound user, LEO satellites move quickly across the sky, zipping in and out of range of receivers on the ground. For this reason, LEO service requires antennae that track each satellite as it passes overhead. These antennae recognize when a satellite is moving out of range, then identify and switch to the next nearest satellite that has come into view. Software coordinates these handoffs so that the user perceives no noticeable decline in service.
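The handoff logic described above can be sketched as a simple selection rule. This is a minimal illustration under stated assumptions: the 25-degree minimum elevation, the satellite IDs, and the rule of keeping the current satellite until it drops out of range are all hypothetical, and real constellations such as Starlink schedule handoffs with far more inputs than elevation alone. For satellites at similar altitude, the one highest in the sky is also the nearest, which is why the sketch ranks candidates by elevation.

```python
from dataclasses import dataclass
from typing import List, Optional

# Hypothetical threshold: below this elevation a satellite is treated as out of range.
MIN_ELEVATION_DEG = 25.0


@dataclass
class SatelliteView:
    sat_id: str           # hypothetical identifier
    elevation_deg: float  # angle above the horizon as seen by the ground antenna


def choose_satellite(current: Optional[str], visible: List[SatelliteView]) -> Optional[str]:
    """Keep the current satellite while it stays in range; otherwise hand off
    to the in-range satellite that is highest in the sky (nearest, for
    satellites at similar altitude)."""
    in_range = [s for s in visible if s.elevation_deg >= MIN_ELEVATION_DEG]
    if not in_range:
        return None  # nothing usable overhead: the link drops until one rises
    if current is not None and any(s.sat_id == current for s in in_range):
        return current  # still in range: no handoff needed
    return max(in_range, key=lambda s: s.elevation_deg).sat_id


# Usage: sat-A has sunk below the threshold, so the antenna hands off.
visible = [
    SatelliteView("sat-A", 20.0),
    SatelliteView("sat-B", 62.5),
    SatelliteView("sat-C", 41.0),
]
print(choose_satellite("sat-A", visible))  # sat-B
print(choose_satellite("sat-C", visible))  # sat-C
```

Keeping the current satellite until it actually leaves range avoids flapping back and forth between two satellites of similar elevation.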

This redundancy becomes vitally important if subsea fiber-optic cable systems come under severe stress from rare climate events, wars, or other geopolitical tensions. In recent years, heavy Israeli bombardment has caused widespread Internet blackouts in Gaza, and Ukraine's army has relied heavily on Starlink in its war with Russia. In a potential invasion of Taiwan by Mainland China, the abstract question of who owns the Internet would become a concrete geopolitical crisis.

Much of the Internet relies heavily on cloud fortresses and hyperscale data centers built by tech titans such as Amazon, Apple, Meta, Microsoft, Google, Cisco, and Oracle. Data creation, storage, and analysis have become increasingly dependent on the world's cloud infrastructure. Many hospitals use the cloud to access patient records and test results. Governments use the cloud for elections, social services, and national disaster alerts. The global financial system relies heavily on the cloud for bank transfers, real-time stock trades, and email payments. Because cloud services are distributed, no single data center holds all of the world's valuable online assets, no single fiber-optic cable connects the entire Internet, and no single hack would wipe out all user data worldwide. As the Internet integrates into virtually every aspect of modern life, governments and tech titans need to keep the world's cloud infrastructure secure and robust.
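The point that no single data center holds everything rests on replication: each piece of data is copied to several independent locations, so losing one or two of them does not lose the data. A minimal sketch, assuming five hypothetical region names, three copies per object, and a hash-based placement rule chosen for illustration rather than taken from any cloud provider:

```python
import hashlib
from typing import Dict, List, Optional, Set

# Hypothetical region names; not any provider's real topology.
REGIONS = ["us-west", "us-east", "eu-west", "ap-east", "sa-east"]


def place_replicas(key: str, regions: List[str], copies: int = 3) -> List[str]:
    """Deterministically pick `copies` distinct, consecutive regions for an object."""
    start = int(hashlib.sha256(key.encode()).hexdigest(), 16) % len(regions)
    return [regions[(start + i) % len(regions)] for i in range(copies)]


def read(key: str, store: Dict[str, Dict[str, bytes]], down: Set[str]) -> Optional[bytes]:
    """Return the object from the first replica region that is still reachable."""
    for region in place_replicas(key, REGIONS):
        if region not in down and key in store[region]:
            return store[region][key]
    return None  # every replica region is unavailable


# Usage: write one record to its three replica regions, then lose two of them.
store: Dict[str, Dict[str, bytes]] = {region: {} for region in REGIONS}
key = "patient-record-42"  # hypothetical object key
replicas = place_replicas(key, REGIONS)
for region in replicas:
    store[region][key] = b"test results"

print(read(key, store, down=set(replicas[:2])))  # b'test results'
print(read(key, store, down=set(replicas)))      # None
```

The data survives as long as at least one of its replica regions is reachable; only the loss of every copy makes it unavailable.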

Global spending on cyber security has likely surpassed $200 billion per annum in recent years. Physical security for cloud servers and data centers is a major part of that bill. At tech titans such as Google, Meta, and Microsoft, computer scientists, econometricians, and software engineers each carry a physical, identifiable piece of hardware that they must use to log on. Cyber security protocols require data encryption, authentication of cloud servers, and verification of proper access rights for individual users.
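The hardware login step can be illustrated with a challenge-response exchange: the server sends a fresh random challenge, and only the device holding the secret can compute the matching answer. This is a deliberately simplified sketch built on Python's standard `hmac` and `secrets` modules. Its symmetric shared secret is an assumption made for brevity; real security keys that follow the FIDO2/WebAuthn standards instead register a public key and sign challenges with a private key that never leaves the device. The class and user names are hypothetical.

```python
import hashlib
import hmac
import secrets
from typing import Dict


class HardwareKey:
    """Toy stand-in for a physical security key holding a device secret."""

    def __init__(self) -> None:
        self._secret = secrets.token_bytes(32)

    def enrollment_secret(self) -> bytes:
        # Shared once with the server at enrollment (simplification: real
        # FIDO2 keys register a public key and keep the private key on-device).
        return self._secret

    def respond(self, challenge: bytes) -> bytes:
        return hmac.new(self._secret, challenge, hashlib.sha256).digest()


class Server:
    def __init__(self) -> None:
        self._enrolled: Dict[str, bytes] = {}

    def enroll(self, user: str, secret: bytes) -> None:
        self._enrolled[user] = secret

    def new_challenge(self) -> bytes:
        # A fresh random nonce per login attempt, so old responses cannot be replayed.
        return secrets.token_bytes(32)

    def verify(self, user: str, challenge: bytes, response: bytes) -> bool:
        secret = self._enrolled.get(user)
        if secret is None:
            return False  # unknown user
        expected = hmac.new(secret, challenge, hashlib.sha256).digest()
        return hmac.compare_digest(expected, response)  # constant-time comparison


# Usage: a hypothetical engineer logs on with the enrolled key.
key, server = HardwareKey(), Server()
server.enroll("engineer", key.enrollment_secret())
challenge = server.new_challenge()
print(server.verify("engineer", challenge, key.respond(challenge)))              # True
print(server.verify("engineer", server.new_challenge(), key.respond(challenge)))  # False (replay)
```

A stolen password alone is useless here: without the physical key, an attacker cannot answer a challenge it has never seen.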

In addition to stealing proprietary data, hackers can try to paralyze the cloud systems that support the Internet worldwide. On October 10, 2023, Amazon, Google, Microsoft, and Cloudflare each disclosed that they had been the target of a colossal distributed denial-of-service (DDoS) attack in August and September. Specifically, Google reported receiving 398 million requests per second at the peak of the 2-minute attack, more than 4,000 times the normal rate at which search queries usually flow through the Internet. This DDoS attack was 7.5 times larger than the previous record.
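The scale of the attack can be checked with simple arithmetic. This short sketch uses only the numbers quoted in the text. The total is an upper bound because it assumes the 398-million peak held for the full two minutes, and the other two values are what the stated multiples imply rather than independently reported figures.

```python
PEAK_RPS = 398_000_000  # peak requests per second reported by Google
DURATION_S = 2 * 60     # the attack lasted about 2 minutes
NORMAL_MULTIPLE = 4_000 # "more than 4,000 times the normal rate"
RECORD_MULTIPLE = 7.5   # "7.5 times larger than the previous record"

# Total requests if the peak rate had been sustained for the whole attack.
upper_bound_total = PEAK_RPS * DURATION_S
# Normal request rate implied by the multiple (a ceiling, since it was "more than" 4,000x).
implied_normal_rps = PEAK_RPS / NORMAL_MULTIPLE
# Previous record implied by the 7.5x ratio.
implied_previous_record = PEAK_RPS / RECORD_MULTIPLE

print(f"{upper_bound_total:.3e}")         # 4.776e+10
print(f"{implied_normal_rps:,.0f}")       # 99,500
print(f"{implied_previous_record:,.0f}")  # 53,066,667
```

In other words, the attack could have delivered up to roughly 48 billion requests in two minutes, against a normal rate of under about 100,000 per second.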

The world's cloud services eventually weathered the storm. Threats like this are one more force pushing the Internet toward a more vertically integrated business model. In the new model, some tech titans operate everything from fiber-optic cables to cloud servers, serving business applications and many other user needs close to the edges of the Internet worldwide. As a result, these tech titans can better insulate their cloud services from future cyber security threats such as DDoS attacks. Meanwhile, these tech titans continue to spend heavily to distribute cloud computing power to the edges of the Internet. This capital spending boom continues to push an evolution from electronics to photonics, which moves data at the speed of light. At the same time, these tech titans increasingly turn to hydrogen power storage, carbon removal, nuclear power generation, and other sources of clean electric capacity to power their cloud services worldwide. As this infrastructure makes computation ever more distributed, tech titans and cloud operators can continue to grow their AI applications exponentially. The many layers of the world's cloud infrastructure expand what can be made digitally viable, from electric vehicles (EV) and virtual reality (VR) headsets to generative artificial intelligence (Gen AI) and the metaverse. On the Internet, these technological advancements support the next dual waves of business model transformation and digital revolution. Today, they shine fresh light on how AI-driven cloud services help accelerate a new generation of disruptive innovations in trade, finance, and technology. Every new business can become an AI cloud service provider.
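Distributing computation to the edges means routing each user request to a nearby site rather than a distant central data center, while steering around sites that are overloaded or under attack. A minimal sketch, assuming hypothetical edge site names, latency measurements in milliseconds, and a simple health flag; production systems typically rely on anycast routing and DNS-based load balancing with many more signals.

```python
from typing import Dict, Optional, Set


def pick_edge(latency_ms: Dict[str, float], unhealthy: Set[str]) -> Optional[str]:
    """Route a request to the lowest-latency edge site that is currently healthy."""
    candidates = {site: ms for site, ms in latency_ms.items() if site not in unhealthy}
    if not candidates:
        return None  # caller would fall back to a central region (not modeled here)
    return min(candidates, key=lambda site: candidates[site])


# Usage with hypothetical sites and round-trip times measured from one user.
latency = {"edge-sfo": 8.0, "edge-sea": 21.0, "edge-iad": 70.0}
print(pick_edge(latency, unhealthy=set()))         # edge-sfo
print(pick_edge(latency, unhealthy={"edge-sfo"}))  # edge-sea
```

Because traffic spreads across many edge sites, an attack that overwhelms one site shifts users to the next-best site instead of taking the whole service down.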

As of mid-2025, we provide our proprietary dynamic conditional alphas for the top U.S. tech titans Meta, Apple, Microsoft, Google, and Amazon (MAMGA). Our proprietary alpha stock signals enable both institutional investors and retail traders to better balance their stock portfolios. Each alpha measures the supernormal excess stock return relative to our smart beta stock investment portfolio strategy. This proprietary strategy minimizes beta exposure to size, value, momentum, asset growth, cash operating profitability, and the market risk premium. Our proprietary algorithmic system for asset return prediction relies on U.S. trademark and patent protection and enforcement.

Our unique algorithmic system for asset return prediction includes 6 fundamental factors: size, value, momentum, asset growth, profitability, and market risk exposure.
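In standard asset pricing, a stock's exposures to these six factors are usually written as a time-series regression whose intercept is the stock's alpha. The equation below uses the conventional Fama-French five-factor model plus momentum; the factor labels (SMB for size, HML for value, MOM for momentum, CMA for asset growth or investment, RMW for profitability) are the usual academic ones and are an assumption here. It is a static textbook form, not the dynamic conditional alpha described in the patent publication, where coefficients may vary over time.

$$
R_{i,t} - R_{f,t} = \alpha_i + b_i\,(R_{m,t} - R_{f,t}) + s_i\,\mathrm{SMB}_t + h_i\,\mathrm{HML}_t + m_i\,\mathrm{MOM}_t + c_i\,\mathrm{CMA}_t + r_i\,\mathrm{RMW}_t + \varepsilon_{i,t}
$$

Here $R_{i,t} - R_{f,t}$ is stock $i$'s return in excess of the risk-free rate in period $t$, $R_{m,t} - R_{f,t}$ is the market risk premium, $b_i, s_i, h_i, m_i, c_i, r_i$ are the factor loadings, and $\alpha_i$ is the average return left unexplained by the six factor exposures.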

Our proprietary alpha stock investment model outperforms major stock market benchmarks such as the S&P 500, MSCI, Dow Jones, and Nasdaq. We implement our proprietary alpha investment model for U.S. stock signals. A comprehensive model description is available on our AYA fintech network platform. Our U.S. Patent and Trademark Office (USPTO) patent publication is available on the official website of the World Intellectual Property Organization (WIPO).

Our core proprietary algorithmic alpha stock investment model estimates long-term abnormal returns for individual U.S. stocks and then ranks these stocks by their dynamic conditional alphas. Most virtual members follow these dynamic conditional alphas, or proprietary stock signals, to trade U.S. stocks on our AYA fintech network platform. For the period from February 2017 to February 2024, our algorithmic alpha stock investment model outperformed the vast majority of global stock market benchmarks such as the S&P 500, MSCI USA, MSCI Europe, MSCI World, Dow Jones, and Nasdaq.
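The estimate-then-rank step can be illustrated with ordinary least squares: regress each stock's excess returns on factor returns, read off the intercept as alpha, and sort stocks from highest to lowest alpha. This is a minimal static sketch in pure Python, assuming synthetic noise-free data, six hypothetical factor series, and hypothetical tickers; it is not the patented dynamic conditional alpha model, whose details are in the patent publication.

```python
import random
from typing import Dict, List, Sequence, Tuple


def solve(a: List[List[float]], b: List[float]) -> List[float]:
    """Solve the linear system a x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]  # augmented matrix [a | b]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                factor = m[r][col] / m[col][col]
                for c in range(col, n + 1):
                    m[r][c] -= factor * m[col][c]
    return [m[i][n] / m[i][i] for i in range(n)]


def estimate_alpha(excess: Sequence[float], factors: Sequence[Sequence[float]]) -> float:
    """OLS intercept of a stock's excess returns on T rows of K factor returns."""
    X = [[1.0, *row] for row in factors]  # leading 1.0 column carries the intercept
    k = len(X[0])
    xtx = [[sum(x[i] * x[j] for x in X) for j in range(k)] for i in range(k)]
    xty = [sum(x[i] * y for x, y in zip(X, excess)) for i in range(k)]
    return solve(xtx, xty)[0]  # normal equations (X'X) beta = X'y; beta[0] is alpha


def rank_by_alpha(
    returns: Dict[str, Sequence[float]], factors: Sequence[Sequence[float]]
) -> List[Tuple[str, float]]:
    """Estimate each stock's alpha and sort from highest to lowest."""
    alphas = {ticker: estimate_alpha(r, factors) for ticker, r in returns.items()}
    return sorted(alphas.items(), key=lambda kv: kv[1], reverse=True)


# Usage with synthetic data: 60 months of 6 hypothetical factor returns.
rng = random.Random(0)
factors = [[rng.gauss(0.0, 0.04) for _ in range(6)] for _ in range(60)]


def synthetic(alpha: float, betas: List[float]) -> List[float]:
    # Noise-free by construction, so OLS should recover alpha almost exactly.
    return [alpha + sum(b * f for b, f in zip(betas, row)) for row in factors]


returns = {
    "STOCK_A": synthetic(0.004, [1.1, 0.2, -0.3, 0.1, 0.4, 0.0]),
    "STOCK_B": synthetic(-0.001, [0.9, -0.1, 0.5, 0.2, -0.2, 0.3]),
    "STOCK_C": synthetic(0.002, [1.0, 0.0, 0.0, 0.0, 0.0, 0.0]),
}
for ticker, alpha in rank_by_alpha(returns, factors):
    print(ticker, round(alpha, 4))
```

On this synthetic data the sketch ranks the hypothetical tickers STOCK_A, STOCK_C, STOCK_B. With real returns the regression includes noise, so one would also inspect standard errors before trusting a ranking; the pure-Python solver only keeps the sketch free of third-party dependencies.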

With U.S. fintech patent approval, accreditation, and protection for 20 years, our AYA fintech network platform provides proprietary alpha stock signals and personal finance tools for stock market investors worldwide.

We design, build, and study our new and non-obvious proprietary algorithmic system for smart asset return prediction and fintech network platform automation. Unlike fintech rivals who keep their proprietary algorithms in a black box, we open the black box by providing free and complete disclosure of our U.S. fintech patent publication. In this rare fashion, we help stock market investors ferret out informative alpha stock signals to enrich their own investment portfolios. With no need to crunch data over extended periods of time, our freemium members can pick and choose their own alpha stock signals for profitable investment opportunities in the U.S. stock market.

Smart investors can consult our proprietary alpha stock signals to ferret out rare opportunities for transient stock market undervaluation. Our analytic reports help many stock market investors better understand global macro trends in trade, finance, technology, and so forth. Most investors can combine our proprietary alpha stock signals with broader and deeper macro financial knowledge to win in the stock market.

Through our proprietary alpha stock signals and personal finance tools, we can help stock market investors achieve their near-term and longer-term financial goals. High-quality stock market investment decisions can help investors attain the near-term goals of buying a smartphone, a car, a house, good health care, and many more. Also, these high-quality stock market investment decisions can further help investors attain the longer-term goals of saving for travel, passive income, retirement, self-employment, and college education for children. Our AYA fintech network platform empowers stock market investors through better social integration, education, and technology.

Andy Yeh (online brief biography)

Co-Chair

AYA fintech network platform

Brass Ring International Density Enterprise ©

Do you find it difficult to beat the long-term average 11% stock market return?

It took us 20+ years to design a new profitable algorithmic asset investment model and its attendant proprietary software technology, which then earned fintech patent protection within 2+ years. AYA fintech network platform serves as everyone's first aid for his or her personal stock investment portfolio. Our proprietary software technology allows each investor to leverage fintech intelligence and information without an exorbitant time commitment. Our dynamic conditional alpha analysis boosts the typical win rate from 70% to 90%+.

Our new alpha model empowers members to become wiser stock market investors with profitable alpha signals! The proprietary quantitative analysis applies the collective wisdom of Warren Buffett, George Soros, Carl Icahn, Mark Cuban, Tony Robbins, and Nobel Laureates in finance such as Robert Engle, Eugene Fama, Lars Hansen, Robert Lucas, Robert Merton, Edward Prescott, Thomas Sargent, William Sharpe, Robert Shiller, and Christopher Sims.

Free signup for stock signals: https://ayafintech.network

Mission on profitable signals: https://ayafintech.network/mission.php

Model technical descriptions: https://ayafintech.network/model.php

Blog on stock alpha signals: https://ayafintech.network/blog.php

Freemium base pricing plans: https://ayafintech.network/freemium.php

Signup for periodic updates: https://ayafintech.network/signup.php

Login for freemium benefits: https://ayafintech.network/login.php

If any of our AYA Analytica financial health memos (FHM), blog posts, ebooks, newsletters, notifications, or any other form of online content curation involves potential copyright concerns, please feel free to contact us at service@ayafintech.network so that we can remove the relevant content within a reasonable time frame.

2017-03-15 08:46:00 Wednesday ET

The heuristic rule of *accumulative advantage* suggests that a small fraction of the population enjoys a large proportion of both capital and wealth creatio

2018-11-09 11:35:00 Friday ET

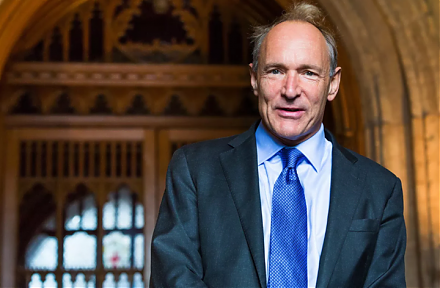

The Internet inventor Tim Berners-Lee suggests that several tech titans might need to be split up in response to some recent data breach and privacy concern

2017-11-23 10:42:00 Thursday ET

As the TV host of Mad Money, Jim Cramer provides 5 key reasons against the purchase and use of cryptocurrencies such as Bitcoin. First, no one knows the ano

2023-03-21 11:28:00 Tuesday ET

Barry Eichengreen compares the Great Depression of the 1930s and the Great Recession as historical episodes of economic woes. Barry Eichengreen (2016)

2022-11-15 10:30:00 Tuesday ET

Stock market misvaluation and corporate investment payout The behavioral catering theory suggests that stock market misvaluation can have a first-order

2017-06-03 05:35:00 Saturday ET

Fundamental value investors, who intend to manage their stock portfolios like Warren Buffett and Peter Lynch, now find it more difficult to ferret out indiv